
Open Qmin

0.8.0

GPU-accelerated Q-tensor-based liquid crystal simulations


Macros

| Type | Name |
|---|---|
| `#define` | `utilities_CUH__` |

Functions

| Type | Function | Description |
|---|---|---|
| `template<typename T> bool` | `gpu_set_array(T *arr, T value, int N, int maxBlockSize=512)` | Set every element of an array to the specified value. |
| `bool` | `gpu_dot_dVec_vectors(dVec *d_vec1, dVec *d_vec2, scalar *d_ans, int N)` | `(scalar) ans = (dVec) vec1 . vec2` |
| `bool` | `gpu_dVec_times_scalar(dVec *d_vec1, scalar factor, int N)` | `(dVec) input *= factor` |
| `bool` | `gpu_dVec_times_scalar(dVec *d_vec1, scalar factor, dVec *d_ans, int N)` | `(dVec) ans = input * factor` |
| `bool` | `gpu_scalar_times_dVec_squared(dVec *d_vec1, scalar *d_scalars, scalar factor, scalar *d_answer, int N)` | `ans = a*b[i]*c[i]^2` |
| `bool` | `gpu_dVec_plusEqual_dVec(dVec *d_vec1, dVec *d_vec2, scalar factor, int N, int maxBlockSize=512)` | `vec1 += a*vec2` |
| `bool` | `gpu_serial_reduction(scalar *array, scalar *output, int helperIdx, int N)` | A trivial reduction of an array by one thread in serial. Think before you use this. |
| `bool` | `gpu_parallel_reduction(scalar *input, scalar *intermediate, scalar *output, int helperIdx, int N, int block_size)` | A straightforward two-step parallel reduction algorithm with a caller-specified `block_size`. |
| `bool` | `gpu_dVec_dot_products(dVec *input1, dVec *input2, scalar *output, int helperIdx, int N)` | Take two vectors of dVecs and compute the sum of the dot products between them using Thrust. |
| `bool` | `gpu_dVec_dot_products(dVec *input1, dVec *input2, scalar *intermediate, scalar *intermediate2, scalar *output, int helperIdx, int N, int block_size)` | Take two vectors of dVecs and compute the sum of the dot products between them. |
| `scalar` | `gpu_gpuarray_dVec_dot_products(GPUArray<dVec> &input1, GPUArray<dVec> &input2, GPUArray<scalar> &intermediate, GPUArray<scalar> &intermediate2, int N=0, int maxBlockSize=512)` | A convenience function: take the GPUArrays themselves and dot their data. |
| `template<class T> void` | `reduce(int size, int threads, int blocks, T *d_idata, T *d_odata)` | Access the CUDA SDK reduction6 kernel. |
| `template<class T> T` | `gpuReduction(int n, int numThreads, int numBlocks, int maxThreads, int maxBlocks, T *d_idata, T *d_odata)` | Like benchmarkReduce: interfaces with reduce and returns the result. |
| `template<typename T> bool` | `gpu_copy_gpuarray(GPUArray<T> &copyInto, GPUArray<T> &copyFrom, int block_size=512)` | Copy data into the target on the device. |
| `scalar` | `host_dVec_dot_products(dVec *input1, dVec *input2, int N)` | Take two vectors of dVecs and compute the sum of the dot products between them on the host. |
| `void` | `host_dVec_plusEqual_dVec(dVec *d_vec1, dVec *d_vec2, scalar factor, int N)` | `vec1 += a*vec2`, on the host. |
| `void` | `host_dVec_times_scalar(dVec *d_vec1, scalar factor, dVec *d_ans, int N)` | `(dVec) ans = input * factor`, on the host. |
| `unsigned int` | `nextPow2(unsigned int x)` | |
| `void` | `getNumBlocksAndThreads(int n, int maxBlocks, int maxThreads, int &blocks, int &threads)` | |
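The reduction helpers above pair a launch-configuration step with routines taken from the CUDA SDK reduction sample. The host-only C++ sketch below assumes that `nextPow2` and `getNumBlocksAndThreads` follow that sample's rule for its two-elements-per-thread kernels, and emulates on the CPU how a two-step reduction (per-block partial sums into an intermediate array, then a sum over those partials) distributes the work. Treating `scalar` as `double` and the function `twoStepReduce` are assumptions for illustration, not part of this file's API.

```cpp
#include <algorithm>
#include <cassert>
#include <vector>

// Assumption: Open Qmin's scalar type is taken to be double here.
using scalar = double;

// Round x up to the next power of two using the bit-smearing trick from the
// CUDA SDK reduction sample. Callers should pass x >= 1 (x = 0 wraps to 0).
unsigned int nextPow2(unsigned int x)
{
    --x;
    x |= x >> 1;
    x |= x >> 2;
    x |= x >> 4;
    x |= x >> 8;
    x |= x >> 16;
    return ++x;
}

// Assumed launch configuration, following the SDK sample's rule for kernels in
// which every thread adds two input elements while loading.
void getNumBlocksAndThreads(int n, int maxBlocks, int maxThreads, int &blocks, int &threads)
{
    assert(n > 0 && maxBlocks > 0 && maxThreads > 0);
    threads = (n < maxThreads * 2)
                  ? static_cast<int>(nextPow2(static_cast<unsigned int>((n + 1) / 2)))
                  : maxThreads;
    blocks = (n + (threads * 2 - 1)) / (threads * 2);
    blocks = std::min(maxBlocks, blocks);
}

// Hypothetical CPU emulation of a two-step reduction. Step 1 accumulates one
// partial sum per block into an intermediate array; step 2 sums the partials.
// On the GPU each step would be a kernel launch.
scalar twoStepReduce(const std::vector<scalar> &input, int maxBlocks, int maxThreads)
{
    const int n = static_cast<int>(input.size());
    if (n == 0)
        return 0.0;
    int blocks = 0, threads = 0;
    getNumBlocksAndThreads(n, maxBlocks, maxThreads, blocks, threads);

    // Each block consumes chunks of 2*threads elements and strides across the
    // grid, so element i lands in block (i / chunk) % blocks.
    const int chunk = 2 * threads;
    std::vector<scalar> intermediate(blocks, 0.0);
    for (int i = 0; i < n; ++i)
        intermediate[(i / chunk) % blocks] += input[i];

    scalar total = 0.0;
    for (scalar partial : intermediate)
        total += partial;
    return total;
}
```

When `maxBlocks` caps the grid, each block strides over several chunks of `2 * threads` elements, which is why the partial-sum index wraps modulo `blocks`. The summation order here differs from a GPU tree reduction, so floating-point results on real data can differ in the last bits.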

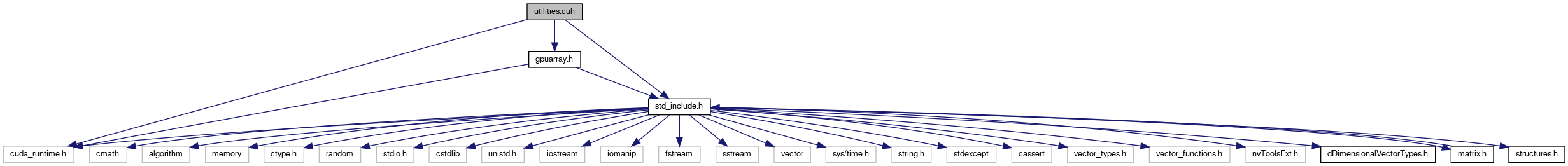

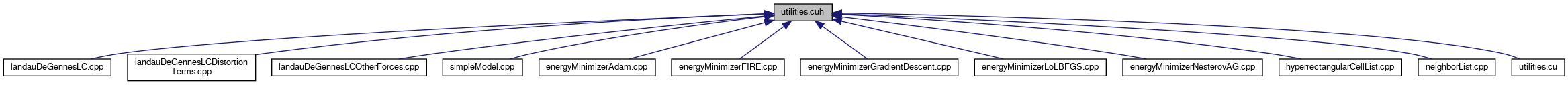

A file providing an interface to the relevant CUDA calls for some simple GPU array manipulations.
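The `host_` entries in the function table describe the element-wise semantics that the GPU routines implement. A minimal C++ sketch of those semantics follows, assuming `scalar` is `double` and `dVec` is a fixed-length array of five scalars (five is used here only as an example dimension). Both type definitions and the `_sketch` function names are hypothetical stand-ins; the real definitions live elsewhere in Open Qmin.

```cpp
#include <array>
#include <cassert>
#include <cstddef>

// Hypothetical stand-ins: scalar as double and dVec as a fixed array of
// DIMENSION scalars. The real Open Qmin definitions may differ.
using scalar = double;
constexpr std::size_t DIMENSION = 5;
using dVec = std::array<scalar, DIMENSION>;

// Component-wise dot product of two dVecs.
scalar dot(const dVec &a, const dVec &b)
{
    scalar sum = 0.0;
    for (std::size_t d = 0; d < DIMENSION; ++d)
        sum += a[d] * b[d];
    return sum;
}

// Sum over i of input1[i] . input2[i] (cf. host_dVec_dot_products).
scalar host_dVec_dot_products_sketch(const dVec *input1, const dVec *input2, int N)
{
    assert(N >= 0);
    scalar total = 0.0;
    for (int i = 0; i < N; ++i)
        total += dot(input1[i], input2[i]);
    return total;
}

// vec1[i] += factor * vec2[i] (cf. host_dVec_plusEqual_dVec).
void host_dVec_plusEqual_dVec_sketch(dVec *vec1, const dVec *vec2, scalar factor, int N)
{
    assert(N >= 0);
    for (int i = 0; i < N; ++i)
        for (std::size_t d = 0; d < DIMENSION; ++d)
            vec1[i][d] += factor * vec2[i][d];
}

// ans[i] = input[i] * factor (cf. host_dVec_times_scalar).
void host_dVec_times_scalar_sketch(const dVec *input, scalar factor, dVec *ans, int N)
{
    assert(N >= 0);
    for (int i = 0; i < N; ++i)
        for (std::size_t d = 0; d < DIMENSION; ++d)
            ans[i][d] = input[i][d] * factor;
}
```

Plain loops like these can serve as a CPU reference when checking the corresponding `gpu_` functions on the same input data.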

`#define utilities_CUH__`

1.8.15
